Research overview

In the last fifty years, digital VLSI circuits with the von Neumann architecture have dominated the development of computer hardware. This is because, as predicted by the famous Moore's law, the number of transistors that can be integrated on a chip doubled roughly every 18 to 24 months. This led to exponential growth in chip performance and efficiency, but left other approaches little room to compete or even survive. However, after fifty years of glory, Moore's law is finally nearing its end. One reason is that transistor sizes are approaching a limit of around 5 nm, where quantum effects become significant. Another is that the high device density leads to unaffordably high power density.

As one of the many research teams looking for the next Moore's law, our group is enthusiastically exploring emerging computing systems. This includes the modeling of emerging devices (memristor, RRAM), brain-inspired computing systems, and their applications in machine learning and the Internet of Things (IoT). One focus is revolutionary brain-inspired hybrid computing systems that leverage emerging devices and structures. The end of Moore's law opens opportunities for non-von Neumann architectures. Our group will continue and expand its research path to pursue the next and future generations of computing systems, which we believe will be brain-inspired, hybrid, heterogeneous, and application-specific.

Ongoing research directions:

Hybrid accelerators for neural networks

Software/hardware co-design for machine learning

Low-power AI IoT systems

Bio-electronics

Highlighted research projects

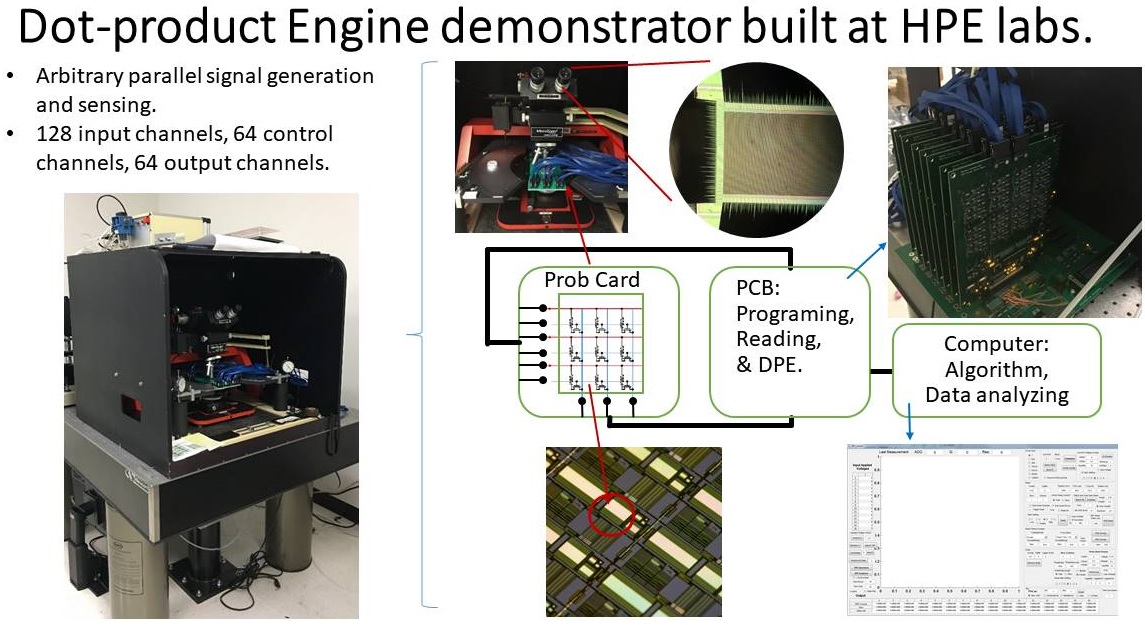

Analog computing accelerator: Dot-Product Engine (DPE)

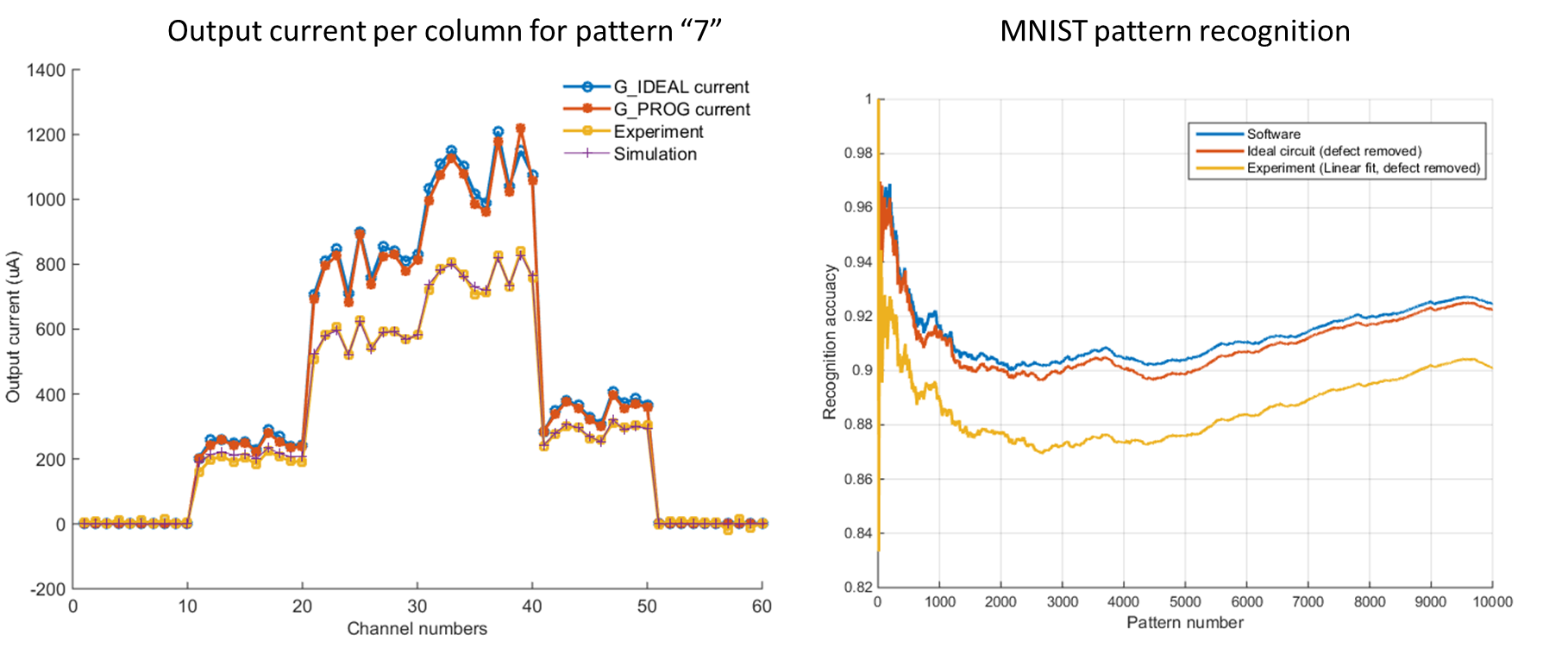

Dot-Product Engine (DPE) was Dr. Miao Hu's major research project at Hewlett Packard Labs from 2014 to 2017. It is an experimental testing platform for programming large-scale memristor crossbar arrays and performing parallel analog computation with them. DPE was supported by the Intelligence Advanced Research Projects Agency (IARPA), with the goal of ultra-high-throughput, power-efficient accelerators. The machine can precisely tune each memristor device to its target conductance. It can perform more than eight thousand multiplications per time step in a tiny 128x64 memristor crossbar. In this crossbar, computation and storage happen at the same cross-point devices, via current accumulation and device conductance, respectively. We also demonstrated ~90% MNIST pattern recognition accuracy and image compression/decompression on the same platform, using a 64x64 array. This work resulted in two publications, in Advanced Materials and Nature Electronics. They were the first to demonstrate purely analog in-memory vector-matrix multiplication with memristor crossbar arrays, and its applications in machine learning and image processing, respectively.
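The core DPE operation can be sketched numerically. The snippet below is a minimal, idealized model. It assumes that signed weights are stored as a differential pair of conductances (G+ and G-) and that the conductance window `G_MIN`..`G_MAX` takes hypothetical values. The function names are illustrative only. The model ignores DAC/ADC conversion, wire resistance, and the device nonidealities of the real hardware. Each cross-point multiplies by Ohm's law, and each column sums its currents by Kirchhoff's current law.

```python
from typing import List, Tuple

Matrix = List[List[float]]

# Hypothetical programmable conductance window (siemens); real devices differ.
G_MIN = 1e-6
G_MAX = 1e-4


def weights_to_conductances(w: Matrix, g_min: float = G_MIN,
                            g_max: float = G_MAX) -> Tuple[Matrix, Matrix, float]:
    """Map signed weights to a differential pair (G+, G-) of conductance matrices.

    Positive weights are stored on the G+ device and negative ones on G-; the
    unused device in each pair sits at g_min. Returns (g_pos, g_neg, scale),
    where scale is the number of siemens per unit of weight.
    """
    w_abs_max = max(abs(x) for row in w for x in row) or 1.0
    scale = (g_max - g_min) / w_abs_max
    g_pos = [[g_min + max(x, 0.0) * scale for x in row] for row in w]
    g_neg = [[g_min + max(-x, 0.0) * scale for x in row] for row in w]
    return g_pos, g_neg, scale


def crossbar_vmm(v: List[float], g: Matrix) -> List[float]:
    """Column currents of an ideal crossbar: I_j = sum_i V_i * G_ij.

    Each cross-point multiplies (Ohm's law, I = V * G) and each column wire
    adds the currents (Kirchhoff's current law), so one read performs
    rows * cols multiplications in parallel.
    """
    rows, cols = len(g), len(g[0])
    return [sum(v[i] * g[i][j] for i in range(rows)) for j in range(cols)]


def dpe_multiply(x: List[float], w: Matrix) -> List[float]:
    """Compute y = x^T W with the differential crossbar model."""
    g_pos, g_neg, scale = weights_to_conductances(w)
    i_pos = crossbar_vmm(x, g_pos)
    i_neg = crossbar_vmm(x, g_neg)
    # The g_min offsets cancel in the difference, leaving (x^T W) * scale.
    return [(ip - ineg) / scale for ip, ineg in zip(i_pos, i_neg)]
```

For a 128x64 array, a single read evaluates 128 x 64 = 8192 products in parallel. This is consistent with the "more than eight thousand multiplications per time step" quoted for the DPE. The differential pair is one common way to represent negative weights with non-negative conductances. The mapping actually used on the DPE hardware may differ.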

Neural network implementation on memristor crossbar

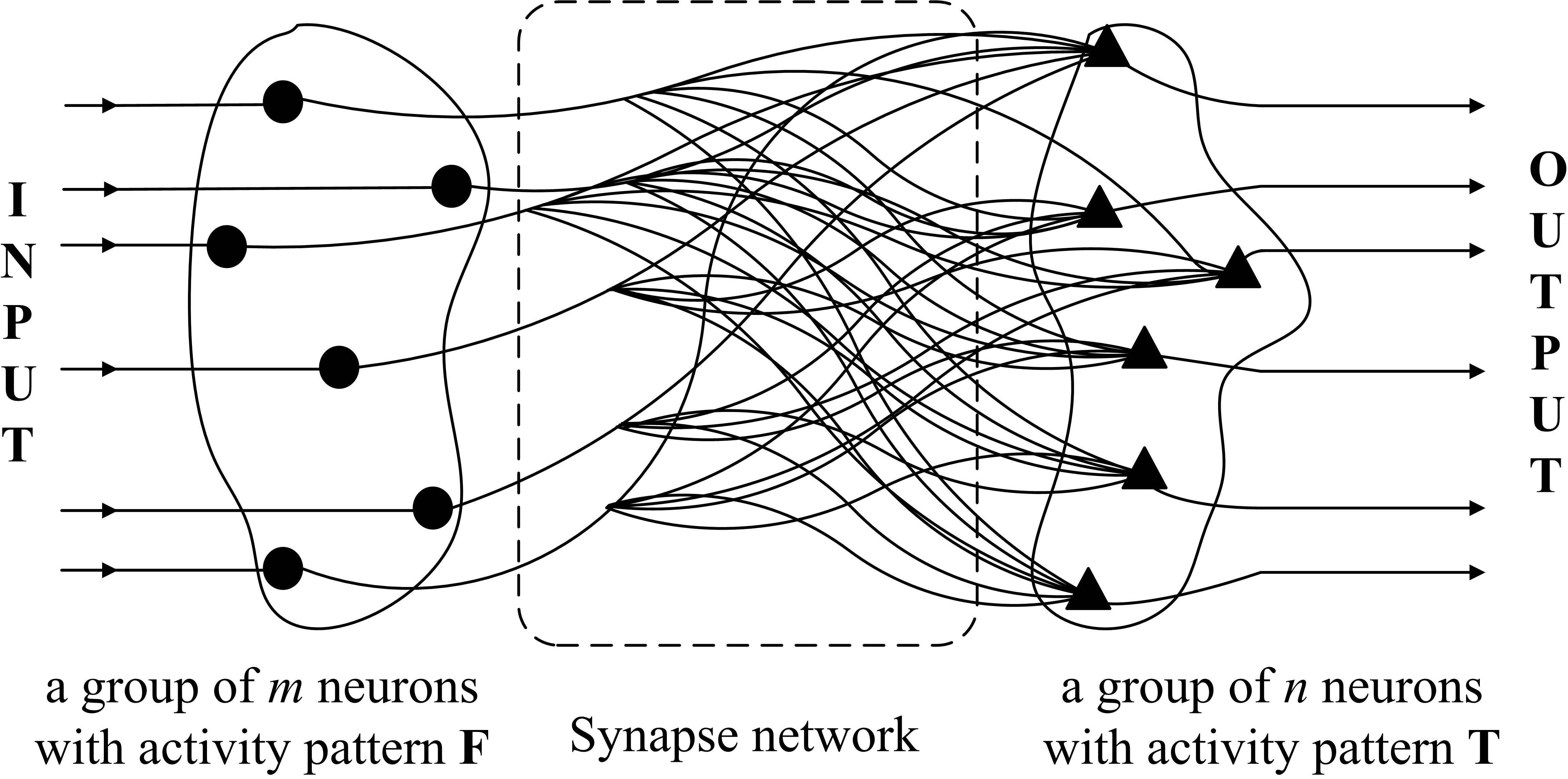

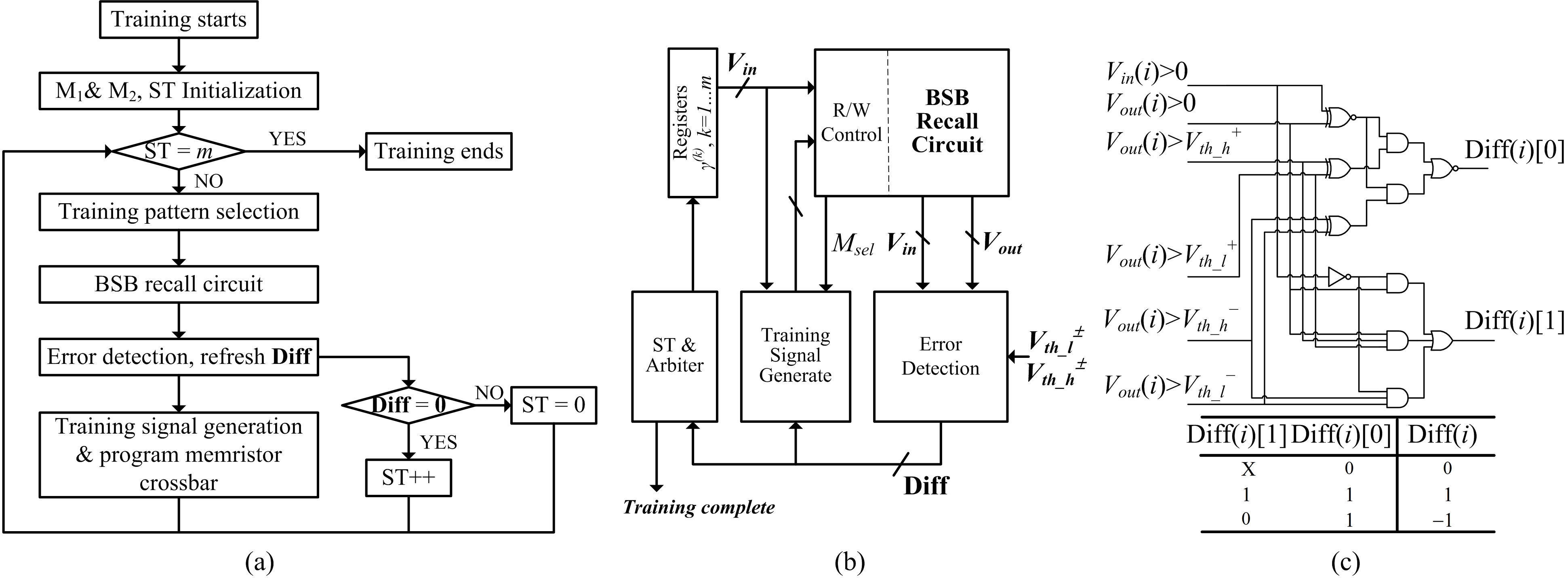

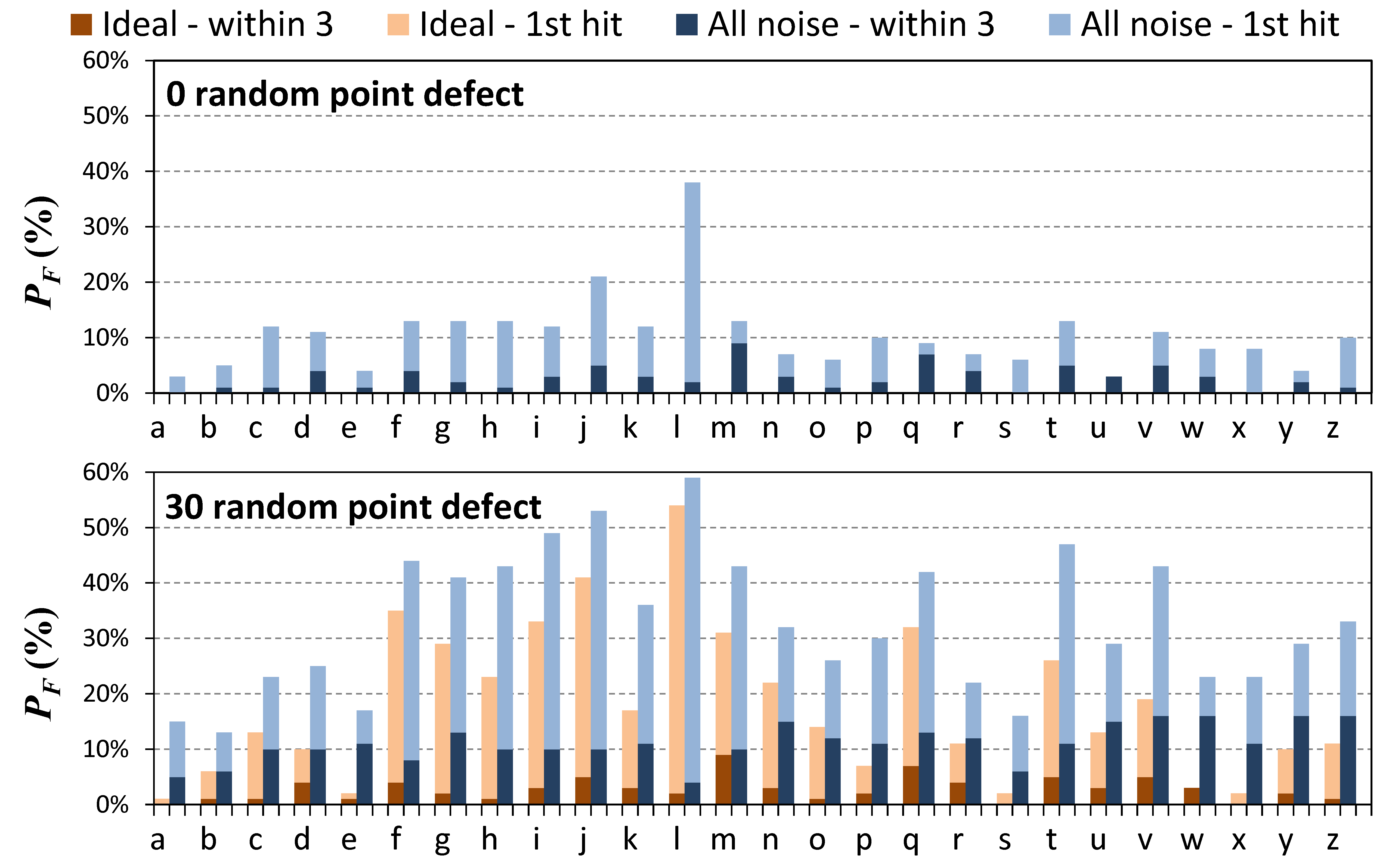

By mimicking highly parallel biological systems, neuromorphic hardware can process information on a compact, energy-efficient platform. However, the traditional von Neumann architecture and limited signal connectivity have severely constrained the scalability and performance of such hardware implementations. Recently, many research efforts have investigated using the newly rediscovered memristor in neuromorphic systems, because memristors behave similarly to biological synapses. In this work, we explore the potential of a memristor crossbar array that functions as an auto-associative memory, and we apply it to Brain-State-in-a-Box (BSB) neural networks. More specifically, we study the recall and training functions of a multi-answer character recognition process based on the BSB model. The robustness of the BSB circuit is analyzed and evaluated with extensive Monte Carlo simulations. These simulations consider input defects, process variations, and electrical fluctuations. The results show that the hardware-based training scheme proposed in this work can alleviate, and even cancel out, most of the noise.
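To make the recall and training functions concrete, here is a minimal software sketch of the textbook BSB model. It assumes the common recall rule x(t+1) = S(alpha * A x(t) + lambda * x(t)), where S is a saturating activation clipped to [-1, 1]. For training, it uses the delta rule A += eta * (x - A x) x^T. The parameter values (`alpha`, `lam`, `eta`, `epochs`) and the 4-element patterns are hypothetical. In a memristor implementation, the product A x is what the crossbar would compute. This sketch is not the hardware-based training scheme proposed in this work.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(a: Matrix, x: Vector) -> Vector:
    """Plain matrix-vector product; the part a crossbar would do in analog."""
    return [sum(a_ij * x_j for a_ij, x_j in zip(row, x)) for row in a]


def saturate(y: float) -> float:
    """BSB activation S(y): linear inside [-1, 1], clipped outside."""
    return max(-1.0, min(1.0, y))


def train_delta(patterns: List[Vector], eta: float = 0.1, epochs: int = 50) -> Matrix:
    """Learn A with the delta rule A += eta * (x - A x) x^T.

    For linearly independent patterns, each stored pattern becomes
    (approximately) an eigenvector of A with eigenvalue 1.
    """
    n = len(patterns[0])
    a = [[0.0] * n for _ in range(n)]
    for _ in range(epochs):
        for x in patterns:
            err = [xi - yi for xi, yi in zip(x, matvec(a, x))]
            for i in range(n):
                for j in range(n):
                    a[i][j] += eta * err[i] * x[j]
    return a


def recall(a: Matrix, x: Vector, alpha: float = 0.5, lam: float = 1.0,
           max_iter: int = 100) -> Vector:
    """Iterate x(t+1) = S(alpha * A x(t) + lam * x(t)) until x stops changing."""
    for _ in range(max_iter):
        ax = matvec(a, x)
        nxt = [saturate(alpha * axi + lam * xi) for axi, xi in zip(ax, x)]
        if max(abs(p - q) for p, q in zip(nxt, x)) < 1e-9:
            return nxt
        x = nxt
    return x


if __name__ == "__main__":
    stored = [[1.0, 1.0, -1.0, -1.0], [1.0, -1.0, 1.0, -1.0]]
    a = train_delta(stored)
    print(recall(a, [1.0, 0.6, -0.8, -0.2]))  # → [1.0, 1.0, -1.0, -1.0]
```

Starting from a noisy version of the first stored pattern, the state is pushed toward the corner of the box [-1, 1]^n that matches the closest stored pattern. This attractor behavior is what makes the network usable as an auto-associative memory for recognition.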

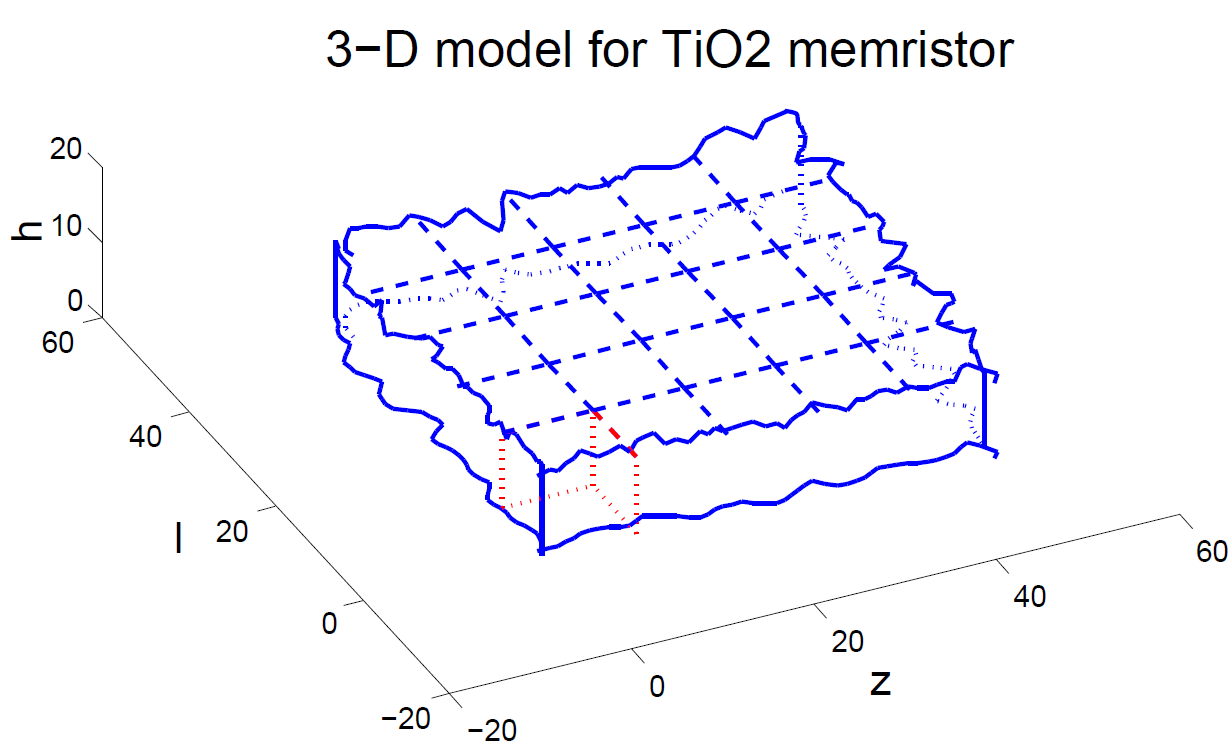

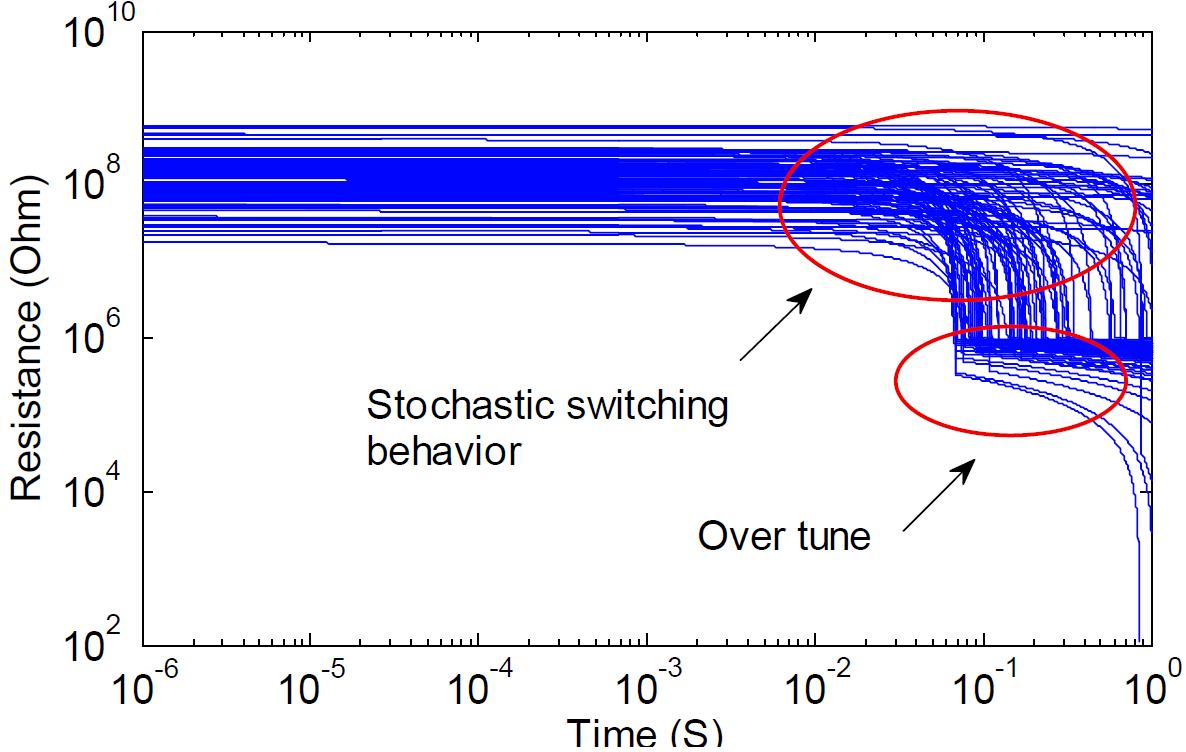

Memristor device modeling on process variation and stochastic behavior

The memristor, the fourth fundamental passive circuit element, has attracted increasing attention since HP Labs rediscovered it in 2008. Its distinctive ability to record the history of the applied voltage/current gives it great potential for future neuromorphic computing system design. However, controlling process variation when manufacturing memristor devices at the nanoscale is very difficult. The impact of process variations on a memristive system that relies on the continuous (analog) states of the memristors could be significant. In addition, stochastic switching behavior has been widely observed. To facilitate the investigation of memristor-based hardware implementations, we compare and summarize different memristor modeling methodologies. These are a simple static model, a statistical analysis that takes the impact of process variations into account, and a stochastic behavior model based on real experimental measurements. In this work, we use the widely studied TiO2 thin-film device as an example to analyze the memristor's electrical properties. Our proposed modeling methodologies can easily be extended to other structures and materials with the necessary modifications.
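The three modeling levels can be illustrated with the linear ion-drift model commonly used for HP's TiO2 device. In that model, the memristance is M(w) = R_on * w/D + R_off * (1 - w/D), and the doped-region width w drifts as dw/dt = mu_v * R_on / D * i(t). The sketch below is a minimal example under several assumptions: illustrative parameter values, forward-Euler integration with hard clamping at the film boundaries, and a Gaussian spread of film thickness D as a stand-in for process variation. The `switching_probability` function adds a simple stochastic switching model. It assumes a Poisson-like process whose characteristic time falls exponentially with voltage. `tau0` and `v0` are hypothetical fitting parameters, not values from the measurements mentioned above.

```python
import math
import random
from typing import List

# Assumed, illustrative parameters for a linear ion-drift TiO2 model;
# not fitted to any particular device.
R_ON = 100.0        # ohms, fully doped (low-resistance) state
R_OFF = 16e3        # ohms, undoped (high-resistance) state
D_NOMINAL = 10e-9   # m, nominal TiO2 film thickness
MU_V = 1e-14        # m^2 / (V s), dopant mobility


def memristance(w: float, d: float) -> float:
    """Static model: doped (R_ON) and undoped (R_OFF) regions in series."""
    x = w / d
    return R_ON * x + R_OFF * (1.0 - x)


def apply_pulse(w: float, d: float, volts: float, seconds: float,
                steps: int = 1000) -> float:
    """Integrate dw/dt = MU_V * R_ON / d * i(t) with forward Euler.

    The state w is clamped to [0, d] as a crude hard boundary.
    """
    dt = seconds / steps
    for _ in range(steps):
        i = volts / memristance(w, d)
        w += MU_V * R_ON / d * i * dt
        w = min(max(w, 0.0), d)
    return w


def monte_carlo_resistance(n: int, sigma_frac: float, volts: float,
                           seconds: float, seed: int = 0) -> List[float]:
    """Resistance after one identical pulse for n simulated devices whose
    thickness is drawn from a Gaussian (an assumed variation model)."""
    rng = random.Random(seed)
    results = []
    for _ in range(n):
        d = max(rng.gauss(D_NOMINAL, sigma_frac * D_NOMINAL), 1e-9)
        w0 = 0.5 * d  # every device starts half doped (arbitrary choice)
        w = apply_pulse(w0, d, volts, seconds)
        results.append(memristance(w, d))
    return results


def switching_probability(volts: float, seconds: float,
                          tau0: float = 1.0, v0: float = 0.15) -> float:
    """P(switch within a pulse) = 1 - exp(-t / tau(V)), tau(V) = tau0 * exp(-V / v0)."""
    tau = tau0 * math.exp(-volts / v0)
    return 1.0 - math.exp(-seconds / tau)


def sample_switch(volts: float, seconds: float, rng: random.Random) -> bool:
    """Draw one stochastic switching event from the model above."""
    return rng.random() < switching_probability(volts, seconds)
```

In this model, the drift rate of the normalized state w/D scales as 1/D^2. As a result, devices that start at the same normalized state (and therefore the same resistance) end up at different resistances after an identical pulse. This is the kind of spread the statistical model is meant to capture.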